In November 2022, OpenAI launched ChatGPT, a conversational AI model. Its ability to sustain multi-turn dialogue, its strong comprehension, and the accuracy and creativity of its answers made it popular quickly: within just two months it had more than 100 million users. The breakthrough of AIGC (AI-Generated Content) models such as ChatGPT rests on key technologies such as generative algorithms, pre-trained models, and multimodal technology.

Behind the popularity of ChatGPT is the strong support of computing power

When OpenAI trained GPT-3, it needed 4,000 GPU cards; GPT-4 needs 20,000. Now, 10 million GPUs are being connected together to train new models. As a rough estimate based on NVIDIA's previous flagship GPU, the A100, at the current price of 63,000 yuan for a 40 GB card, the GPU hardware cost alone for these three stages is 252 million yuan, 1.26 billion yuan, and 630 billion yuan, respectively.
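The three cost figures are simply the card count multiplied by the unit price. The following is a minimal sketch of that arithmetic; the stage labels and the function name `gpu_cost_yuan` are illustrative and not from the source, and the estimate ignores everything except the purchase price of the GPUs.

```python
# GPU hardware cost = number of cards x unit price (figures from the text above).
A100_PRICE_YUAN = 63_000  # quoted price of one 40 GB A100

# Card counts quoted for each stage; the labels are illustrative only.
cards_per_stage = {
    "GPT-3": 4_000,
    "GPT-4": 20_000,
    "new models": 10_000_000,
}


def gpu_cost_yuan(cards: int, unit_price: int = A100_PRICE_YUAN) -> int:
    """Return the GPU purchase cost in yuan, ignoring every other expense."""
    return cards * unit_price


costs = {stage: gpu_cost_yuan(n) for stage, n in cards_per_stage.items()}

for stage, cost in costs.items():
    print(f"{stage}: {cost / 1e9:,.3f} billion yuan")
```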

As the tech giants rush to release their own large AI models, market demand for AI servers has risen sharply

By PCB value, an AI server can be divided into three parts: the GPU board set, the CPU motherboard set, and the peripheral modules.

Peripheral modules (power supply, hard disks, fans, and so on): high reliability requirements and high power.

We use the DGX H100, NVIDIA's signature AI server, as the representative product for calculating PCB value.

GPU Board Tray

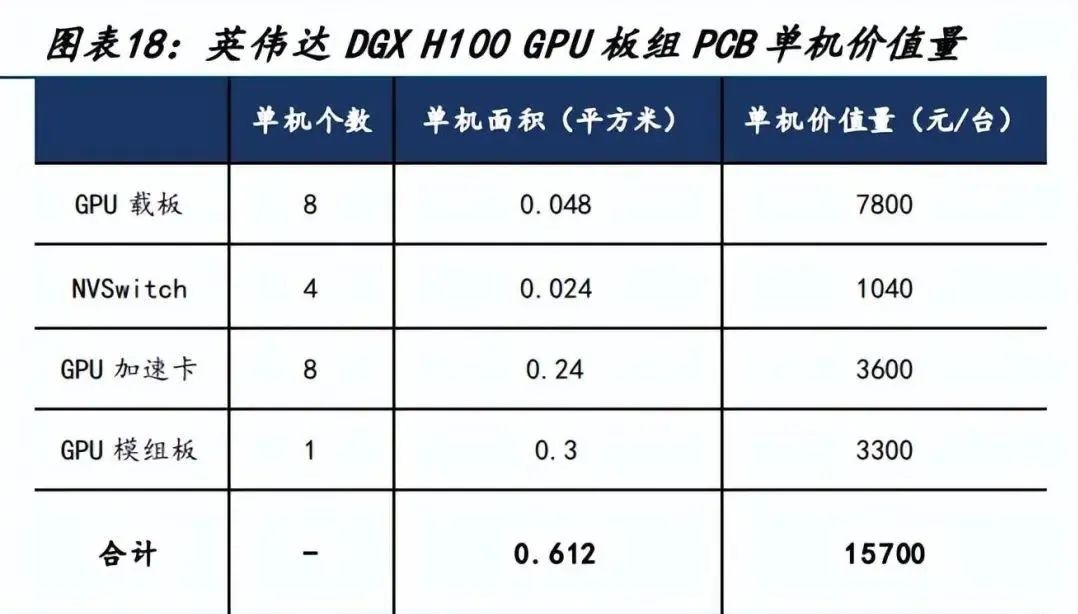

The GPU board set of the DGX H100 consists of four main parts: the GPU carrier boards, NVSwitch, OAM, and UBB, with a total PCB value of about 15,700 yuan per server. According to industry chain research, a GPU carrier board has a PCB value of approximately 150 US dollars, and the DGX H100 includes eight GPU carrier boards.

The NVSwitch carrier is similar to a carrier board, with a value of approximately $40 each according to industry chain research. The DGX H100 has four in total.

OAM (OCP Accelerator Module) is the GPU accelerator card. The DGX H100 has 8 OAMs. According to industry research, the OAM board is expected to be a 5th-order HDI board with a unit price of 15,000 yuan per square meter, for a total value of 3,600 yuan per server.

UBB (Universal Baseboard) is the GPU module board that carries the entire GPU platform. Based on the DGX H100 baseboard specifications and industry chain research, the unit price is 11,000 yuan per square meter, and the PCB value of the UBB is 3,300 yuan.
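The four components can be summed as a quick check. The sketch below is hedged: the text quotes the carrier boards in US dollars but does not state an exchange rate, so the 6.5 yuan per dollar used here is an assumption, chosen only because it reproduces the quoted total of roughly 15,700 yuan. The implied board areas are derived from the quoted prices per square meter and are not stated in the source.

```python
# ASSUMPTION: the exchange rate is not given in the text. 6.5 yuan per US
# dollar is used only because it reproduces the quoted total (~15,700 yuan).
YUAN_PER_USD = 6.5

gpu_carrier_yuan = 8 * 150 * YUAN_PER_USD  # 8 GPU carrier boards at ~$150 each
nvswitch_yuan = 4 * 40 * YUAN_PER_USD      # 4 NVSwitch carriers at ~$40 each
oam_yuan = 3_600                           # 8 OAMs, total quoted in yuan
ubb_yuan = 3_300                           # UBB, quoted in yuan

gpu_board_set_yuan = gpu_carrier_yuan + nvswitch_yuan + oam_yuan + ubb_yuan

# Board areas implied by the quoted prices per square meter (derived values).
oam_area_m2 = oam_yuan / 15_000
ubb_area_m2 = ubb_yuan / 11_000

print(f"GPU carrier boards: {gpu_carrier_yuan:,.0f} yuan")
print(f"NVSwitch carriers:  {nvswitch_yuan:,.0f} yuan")
print(f"GPU board set:      {gpu_board_set_yuan:,.0f} yuan")
print(f"Implied OAM area:   {oam_area_m2:.2f} m^2 for 8 modules")
print(f"Implied UBB area:   {ubb_area_m2:.2f} m^2")
```

With a different exchange rate the dollar-denominated items, and therefore the total, shift accordingly.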

CPU motherboard

This set includes the CPU carrier boards, the CPU motherboard, and the accessory boards. The functional accessory boards include the system memory cards, network cards, expansion cards, and the board for the operating system storage drives. The total PCB value is 3,554 yuan per server.

According to industry chain research, the CPU carrier boards are similar in specification to the GPU carrier boards. The DGX H100 has 2 CPUs, so the carrier boards are worth about 1,300 yuan per server.

The CPU motherboard has an estimated area of 0.38 square meters. According to industry chain research, the DGX H100 motherboard uses the PCIe 5.0 bus standard, with a unit price of approximately 5,000 yuan per square meter, so the CPU motherboard alone is worth 1,900 yuan.

There are many types of accessory boards. According to industry chain research, they are typically 8-10 layer boards with a unit price of approximately 1,500 yuan per square meter, and their combined value is approximately 354 yuan per server.
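The CPU-side total of 3,554 yuan follows directly from the three items above. A minimal sketch of the sum is shown below; the variable names are illustrative, and the accessory board area is a derived value (value divided by unit price), not a figure given in the text.

```python
# CPU carrier boards: 2 CPUs, about 1,300 yuan per server (quoted).
cpu_carrier_yuan = 1_300

# CPU motherboard: area x unit price.
motherboard_area_m2 = 0.38
motherboard_price_yuan_per_m2 = 5_000
motherboard_yuan = round(motherboard_area_m2 * motherboard_price_yuan_per_m2)

# Accessory boards: combined value quoted directly.
accessory_yuan = 354
accessory_price_yuan_per_m2 = 1_500

cpu_set_yuan = cpu_carrier_yuan + motherboard_yuan + accessory_yuan

# Derived, not stated in the text: the accessory board area implied by the price.
accessory_area_m2 = accessory_yuan / accessory_price_yuan_per_m2

print(f"CPU motherboard:  {motherboard_yuan:,} yuan")
print(f"CPU set total:    {cpu_set_yuan:,} yuan")
print(f"Implied accessory board area: {accessory_area_m2:.3f} m^2")
```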

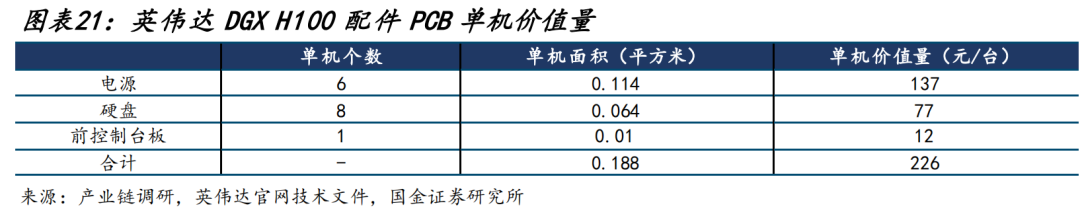

Power supply, hard disk and accessories

The total value is 226 yuan per server. The unit price of these boards ranges from 1,000 to 1,500 yuan per square meter. They mainly comprise 6 power supplies with a PCB area of 0.019 square meters each, 8 hard disks with a PCB area of 0.008 square meters each, and a front console board of about 0.010 square meters.
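As a sanity check, the listed board areas can be added up and priced at both ends of the quoted range. This is a rough sketch with illustrative variable names; the implied average price is derived here and does not appear in the source.

```python
# PCB areas quoted in the text, in square meters.
power_supply_area = 6 * 0.019   # 6 power supplies
hard_disk_area = 8 * 0.008      # 8 hard disks
console_area = 0.010            # front console board

total_area_m2 = round(power_supply_area + hard_disk_area + console_area, 3)

# Value at the low and high ends of the quoted price range.
low_value_yuan = total_area_m2 * 1_000
high_value_yuan = total_area_m2 * 1_500

# Average price implied by the quoted 226 yuan total (derived value).
implied_price_yuan_per_m2 = 226 / total_area_m2

print(f"Total area: {total_area_m2:.3f} m^2")
print(f"Value range: {low_value_yuan:.0f} to {high_value_yuan:.0f} yuan")
print(f"Implied average price: {implied_price_yuan_per_m2:,.0f} yuan per m^2")
```

The quoted 226 yuan sits inside the range these areas produce, which suggests the listed boards account for most of the accessory value.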

Combining the three sections (GPU board set, CPU motherboard set, and accessories), we estimate that a complete DGX H100 uses 1.428 square meters of PCB, with a PCB value of 19,520 yuan per server.
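The whole-machine figure can be cross-checked against the three subtotals. In the sketch below, which uses only numbers quoted in the text, the rounded subtotals add up to 19,480 yuan, 40 yuan short of the quoted 19,520 yuan; the gap is consistent with the GPU board set figure having been rounded from about 15,740 yuan. The average price per square meter is a derived value.

```python
# Subtotals quoted in the text, in yuan per server.
gpu_board_set = 15_700
cpu_board_set = 3_554
accessories = 226

quoted_total = 19_520
quoted_area_m2 = 1.428

sum_of_subtotals = gpu_board_set + cpu_board_set + accessories
gap = quoted_total - sum_of_subtotals

# GPU board set value that would make the subtotals match the quoted total.
implied_gpu_board_set = quoted_total - cpu_board_set - accessories

# Derived: average PCB price across the whole server.
average_price_yuan_per_m2 = quoted_total / quoted_area_m2

print(f"Sum of subtotals: {sum_of_subtotals:,} yuan (gap {gap} yuan)")
print(f"Implied GPU board set: {implied_gpu_board_set:,} yuan")
print(f"Average price: {average_price_yuan_per_m2:,.0f} yuan per m^2")
```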

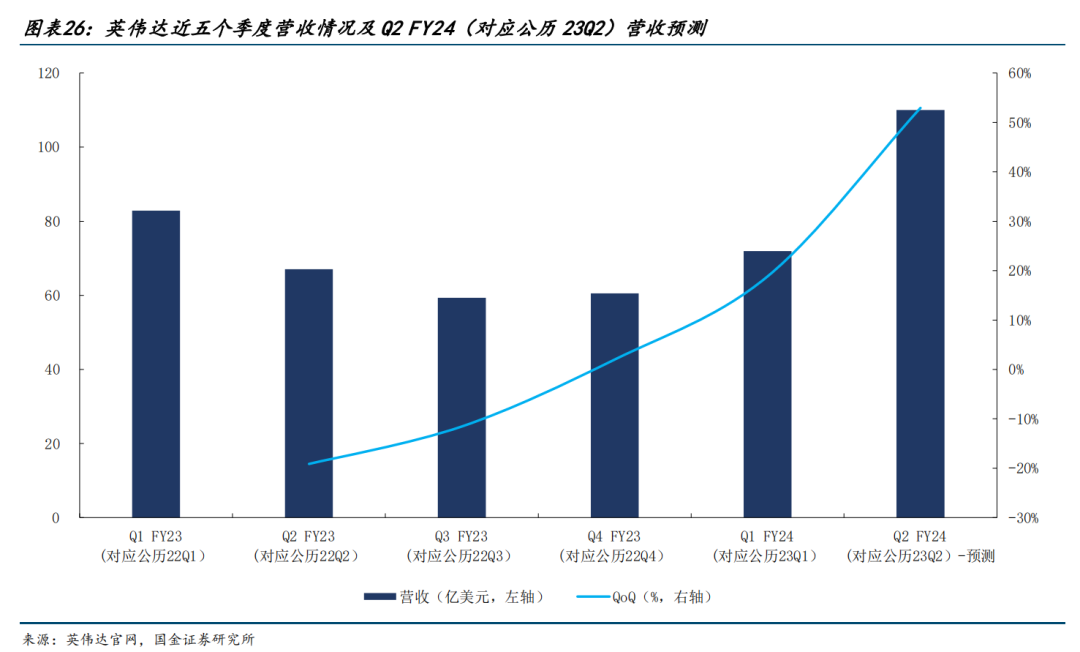

The AI server has reached the stage of accelerated development

NVIDIA's results show that its data center revenue is growing rapidly: in 23Q1 it rose 14% year-on-year and 18% quarter-on-quarter. NVIDIA has given clear guidance that data center revenue is expected to grow 53% in 23Q2, which indicates that AI servers are entering a period of rapid growth.

The demand for AI servers will drive the development of PCB businesses related to servers

At 19,520 yuan per unit, the PCB value of an AI server is 705% higher than the 2,425 yuan per unit of an ordinary server. As AI server shipments grow rapidly, the PCB industry will expand as well.
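The 705% figure is a percentage increase, not a ratio, which is easy to misread. A minimal sketch of the calculation, with illustrative variable names:

```python
ai_server_pcb_yuan = 19_520        # PCB value of one AI server (DGX H100 estimate)
ordinary_server_pcb_yuan = 2_425   # PCB value of one ordinary server

# Ratio: how many times the ordinary server's PCB value.
ratio = ai_server_pcb_yuan / ordinary_server_pcb_yuan

# Percentage increase over the ordinary server.
increase_pct = (ai_server_pcb_yuan - ordinary_server_pcb_yuan) / ordinary_server_pcb_yuan * 100

print(f"Ratio: {ratio:.2f}x")
print(f"Increase: {increase_pct:.0f}%")
```

In other words, an AI server carries roughly eight times the PCB value of an ordinary server.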

According to IDC data and forecasts, global shipments of GPU servers from 2022 to 2025 are expected to be 3.19 million, 3.64 million, 4.12 million, and 4.63 million units, respectively. Based on industry research, training GPUs accounted for 25% of shipments in 2022. Considering the rapid growth in the number of large models launched by cloud service providers and in the demand for training computing power, we assume that the share of training GPUs will reach 35%, 40%, and 40% in 2023, 2024, and 2025, corresponding to training GPU shipments of 1.27 million, 1.65 million, and 1.85 million units. The corresponding inference GPU shipments are expected to be 2.37 million, 2.47 million, and 2.78 million units.
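The training and inference figures follow from applying the assumed training share to each year's total and taking the remainder. A short sketch of that split, using only the numbers quoted above (the dictionary names are illustrative):

```python
# Total shipments in millions of units, by year (quoted IDC figures).
total_shipments_m = {2023: 3.64, 2024: 4.12, 2025: 4.63}

# Assumed share of training GPUs, by year (the report's assumption).
training_share = {2023: 0.35, 2024: 0.40, 2025: 0.40}

training_m = {}
inference_m = {}
for year, total in total_shipments_m.items():
    # Round to two decimals, as the text does, before taking the remainder.
    training_m[year] = round(total * training_share[year], 2)
    inference_m[year] = round(total - training_m[year], 2)

for year in total_shipments_m:
    print(f"{year}: training {training_m[year]:.2f}M, inference {inference_m[year]:.2f}M")
```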

As the main designer of AI server GPU solutions, NVIDIA uses an 8-card configuration as its standard design for both training servers and inference servers. We therefore calculate on the basis of 8 cards per server. Dividing the GPU shipments above by 8 gives estimated AI server shipments of roughly 460,000 units in 2023, with a growth rate of 17%-19% over 2023-2025. Of these, roughly 160,000 units are AI training servers and roughly 300,000 units are AI inference servers.
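The conversion from GPU shipments to server shipments is a division by 8. The sketch below treats the training and inference shipment figures from the preceding paragraph as GPU counts, which is an interpretation rather than something the source states outright; on that reading, the result for 2023 is about 455,000 servers, that is, in the hundreds of thousands.

```python
CARDS_PER_SERVER = 8  # NVIDIA's standard 8-card design, as stated above

# GPU shipments in units, from the preceding paragraph:
# year -> (training GPUs, inference GPUs)
gpu_shipments = {
    2023: (1_270_000, 2_370_000),
    2024: (1_650_000, 2_470_000),
    2025: (1_850_000, 2_780_000),
}


def servers_from_gpus(gpus: int, cards_per_server: int = CARDS_PER_SERVER) -> float:
    """Convert a GPU count into a server count at a fixed cards-per-server ratio."""
    return gpus / cards_per_server


server_shipments = {
    year: {
        "training": servers_from_gpus(train),
        "inference": servers_from_gpus(infer),
        "total": servers_from_gpus(train + infer),
    }
    for year, (train, infer) in gpu_shipments.items()
}

for year, s in server_shipments.items():
    print(f"{year}: training {s['training']:,.0f}, "
          f"inference {s['inference']:,.0f}, total {s['total']:,.0f}")
```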

According to the above calculations, the PCB market size for AI training servers and AI inference servers is expected to reach 6 billion-7 billion and 3 billion-3.5 billion, respectively, in 2023-2025, with growth rates of 23%-17% and 17%-19%.

Article reference source: "AI Brings Incremental PCB Demand; Strong-Alpha Companies Benefit First", Sinolink Securities (Fan Zhiyuan, Deng Xiaolu, Liu Yanxue)
